AI seems poised to change everything, and it has already changed a lot. From routine tasks to projects so big they would normally take a whole team, machines help us reach our goals faster.

Data collection is no exception. Here’s how it is changing, and what role the Data365 API plays in it.

Overview:

- For AI, data collection is part of the cycle: AI both gathers data and needs it to learn.

- AI can find data in places that used to be out of reach or too chaotic to understand, and still make heads or tails of it.

- With AI, data collection and analysis are tied together automatically.

This guide breaks down how data collection really works today – what’s changed, what’s improving, and what you actually need to pay attention to in 2026.

What “Data Collection” Means Today

Data, and the way we perceive it, has changed over time. New metrics have entered the game, so when we talk about “data collection” in the 2020s, we mean an entire universe of signals, behaviors, clicks, swipes, camera feeds, and sensor readings that never sleep. It’s a bit like the Eye of Sauron, only hopefully less ominous. What was once beyond reach is now as normal as morning coffee.

Today, data comes in every shape and flavor. There’s structured data: perfect rows, perfect columns. Then comes unstructured data, which is basically everything else: photos, videos, messages, voice notes, memes. And now there’s real-time data, streaming in so fast it feels like trying to drink from a fire hose.

AI systems thrive on all of it. They watch, listen, and learn from millions of micro-interactions captured by smartwatches, fridges, search engines, and more. Businesses use these digital breadcrumbs to understand trends, forecast needs, and sometimes just figure out why everyone suddenly started buying air fryers (apparently, there’s no answer to that one yet).

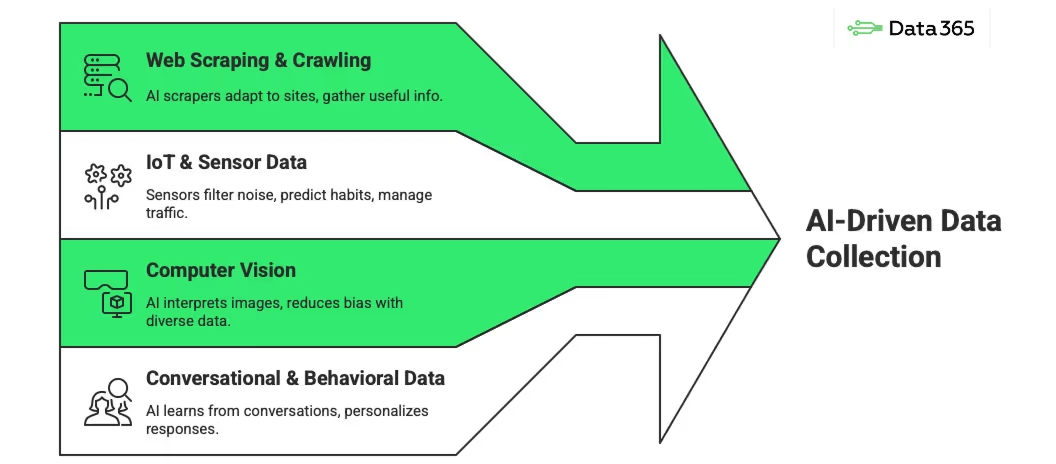

AI-Powered Methods of Data Collection

Instead of people manually sifting through information, machines now spot patterns faster than you can say “easy peasy lemon squeezy.”

Automated Web Scraping & Crawling

It’s like sending out a swarm of extremely polite, extremely fast librarians who zip around the internet collecting facts. Traditional scrapers follow rigid rules; AI-powered ones can improvise a bit.

They can recognize layouts, adapt when a website changes, and pick out useful bits even when everything looks like a digital spaghetti bowl. Companies use them for market research, competitive insights, and sometimes just to figure out why another brand suddenly became the “main character” online.
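In practice, the “polite” part of a crawler usually starts with robots.txt, the file where a site states what bots may fetch and how fast. Here’s a minimal sketch using Python’s standard `urllib.robotparser`; the robots.txt text, the `polite-bot` user agent, and the example URLs are made up for illustration, and the AI-driven layout adaptation described above is not covered.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt for illustration. A live crawler would call
# parser.set_url("https://<site>/robots.txt") and parser.read() instead.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def plan_crawl(parser: RobotFileParser, agent: str, urls: list[str]) -> tuple[list[str], float]:
    """Return the URLs this agent may fetch and the pause to take between requests."""
    allowed = [url for url in urls if parser.can_fetch(agent, url)]
    # crawl_delay() returns None when the site sets no delay; fall back to 1 second.
    delay = parser.crawl_delay(agent) or 1
    return allowed, delay


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    urls = ["https://example.com/blog/post", "https://example.com/private/report"]
    print(plan_crawl(rules, "polite-bot", urls))
```

The fallback delay of one second is an arbitrary but conservative choice; a crawler that respects both the allow rules and the pause is far less likely to get blocked.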

IoT & Sensor-Based Data Collection

The Internet of Things is basically the world’s largest group chat, except everyone’s talking in numbers. Sensors in cars, thermostats, factory machines, and even toothbrushes send data constantly (those little spies know when you skip the recommended two minutes of brushing; let’s hope they won’t snitch to your dentist).

Computer Vision & Image-Based Data Collection

Computer vision is what happens when AI starts watching and noticing everything: objects, faces, text, road signs, even the plant you forgot to water. Modern systems don’t just “look”; they interpret. Powered by deep learning, with convolutional neural networks (CNNs) as the longtime MVPs and Vision Transformers as the trendy newcomers, machines can classify images, read characters, segment scenes, and spot patterns faster than your phone recognizes your face before you’re fully awake.

But all this magic depends on data, and lots of it. Image-based collection now pulls visuals from just about everywhere. And because not every category has thousands of perfect examples (rare objects aren’t exactly lining up for photo shoots), AI helps out by generating synthetic images with generative adversarial networks (GANs).

Conversational & Behavioral Data Collection

Conversational and behavioral data collection is where AI learns by talking – and by quietly watching how users actually behave. Every chat message, voice prompt, and hesitation before clicking “Buy now” becomes training material.

AI gathers this data in a few ways:

- Human-to-Machine (H2M) is the everyday method: early versions of a chatbot talk with real people, collect all that messy, delightful human input, and learn from it.

- Machine-to-Machine (M2M) speeds things up by letting simulated users generate large volumes of conversations that humans later polish.

- Human-to-Human (H2H) data, meaning real dialogues between people, still helps AI learn natural phrasing, though it’s slower and pricier to collect.

AI-Powered Data Collection Tools for Users

There are many approaches, so you can build a routine that fits your needs and capacity:

- AI-driven form and survey builders are a great place to start. They adapt to answers on the fly, switch formats when needed, and even accept files, ratings, payments, or geolocation. Bonus: built-in AI analytics instantly highlight patterns, saving you from deciphering bar charts.

- For larger-scale digging, AI-enabled web scrapers and APIs can plow through mountains of structured and unstructured content (reviews, transactions, comments, you name it) without getting lost in a maze of pop-ups and cookie banners.

- When the job needs a human touch, AI-coordinated crowdsourcing steps in. Instead of micromanaging hundreds of contributors, AI distributes tasks, checks their quality, and flags anything suspicious. Think of it as having a project manager who works at superhuman speed and never forgets to follow up.

- And because messy data is as inevitable as unanswered emails, AI-powered validation and cleaning happen in real time. Algorithms catch missing fields, odd entries, or contradictory answers the moment they appear, long before they get a chance to mess up the whole dashboard.
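Before any AI gets involved, the three checks named above (missing fields, odd entries, contradictory answers) can be sketched as plain rules applied to each survey response as it arrives. The field names and the age range here are hypothetical; an AI-powered validator would typically learn what “odd” looks like from past data instead of hard-coding it.

```python
# Hypothetical survey schema: these three answers must always be present.
REQUIRED_FIELDS = ("respondent_id", "age", "is_customer")


def validate_response(resp: dict) -> list[str]:
    """Return human-readable problems found in one survey response (empty list = clean)."""
    problems = []

    # 1. Missing fields: key absent, None, or an empty string.
    for name in REQUIRED_FIELDS:
        if resp.get(name) in (None, ""):
            problems.append(f"missing field: {name}")

    # 2. Odd entries: numeric values outside a plausible (assumed) range.
    age = resp.get("age")
    if isinstance(age, (int, float)) and not 0 < age < 120:
        problems.append(f"implausible age: {age}")

    # 3. Contradictions: answers that cannot both be true.
    if resp.get("is_customer") is False and (resp.get("months_as_customer") or 0) > 0:
        problems.append("contradiction: non-customer reports months as a customer")

    return problems


if __name__ == "__main__":
    incoming = [
        {"respondent_id": "r1", "age": 34, "is_customer": True, "months_as_customer": 12},
        {"respondent_id": "r2", "age": 250, "is_customer": True},
        {"respondent_id": "r3", "age": 40, "is_customer": False, "months_as_customer": 6},
    ]
    # Validate each response as it arrives, before it reaches a dashboard.
    for resp in incoming:
        print(resp.get("respondent_id"), validate_response(resp))
```

Returning a list of problems rather than raising on the first one lets you flag a response for review while still seeing everything wrong with it at once.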

Standard Data Retrieval Tools vs. AI Data Collection

Traditional data tools were built for order. Give them structured tables, predictable schemas, and clean APIs, and they deliver every time. If your data behaves, these systems feel unstoppable: no layout changes, no guesswork, no broken scripts. Just reliable responses, exactly as expected.

AI data collection steps in when the world gets messy. Images, videos, social posts, shifting HTML – things that refuse to fit into neat rows. These tools can adapt, read context, and pull meaning from unstructured pages the way a human would. Instead of following rules, AI learns patterns and adjusts when the source changes.

In real workflows, the strongest setups use both. APIs keep the foundation clean and dependable. AI fills in the gaps where structure disappears. Together, they make data collection feel less like maintenance and more like momentum.

Data365: When Your AI Needs Data to Grow

AI needs data to live up to its reputation as a remedy for everything. The more “human” the data, the better your AI understands our world. That makes data from social media platforms (the more platforms, the better) ideal study material.

A Social Media API like Data365 brings a bunch of networks together under one umbrella and returns posts, comments, timestamps, reactions, and other publicly available bits in clean, predictable JSON you can actually build things with.

Everything arrives in a clear hierarchy, so following a conversation thread doesn’t feel like untangling a group chat from screenshots. Deduplication keeps reshared content from looping back like a déjà vu glitch, and high uptime plus an asynchronous workflow mean the system doesn’t tap out when you push it.
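To make “clear hierarchy” and “deduplication” concrete, here’s a client-side sketch of both ideas over a flat list of records. The JSON payload and its field names (`id`, `parent_id`, `text`) are hypothetical and are not Data365’s documented response format, and content hashing is just one common deduplication approach, not necessarily what the API does internally.

```python
import hashlib
import json
from collections import defaultdict

# Hypothetical flat payload; field names are illustrative only.
PAYLOAD = """[
  {"id": "p1", "parent_id": null, "text": "New air fryer just arrived!"},
  {"id": "p2", "parent_id": null, "text": "new air fryer   just arrived!"},
  {"id": "c1", "parent_id": "p1", "text": "Which model?"},
  {"id": "c2", "parent_id": "c1", "text": "The big one."},
  {"id": "c1", "parent_id": "p1", "text": "Which model?"}
]"""


def content_key(text: str) -> str:
    """Hash of case- and whitespace-normalized text, so reshared copies collide."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def dedupe(records: list[dict]) -> list[dict]:
    """Drop repeated ids, plus top-level posts whose content was already seen."""
    seen_ids, seen_content, unique = set(), set(), []
    for rec in records:
        if rec["id"] in seen_ids:
            continue
        if rec["parent_id"] is None:
            key = content_key(rec["text"])
            if key in seen_content:
                continue
            seen_content.add(key)
        seen_ids.add(rec["id"])
        unique.append(rec)
    return unique


def build_tree(records: list[dict]) -> dict:
    """Map each parent id (None for top-level posts) to its direct children's ids."""
    children = defaultdict(list)
    for rec in records:
        children[rec["parent_id"]].append(rec["id"])
    return dict(children)


def walk(tree: dict, node=None, depth: int = 0):
    """Yield (depth, id) pairs in thread order, depth-first."""
    for child in tree.get(node, []):
        yield depth, child
        yield from walk(tree, child, depth + 1)


if __name__ == "__main__":
    records = dedupe(json.loads(PAYLOAD))
    for depth, rec_id in walk(build_tree(records)):
        print("  " * depth + rec_id)
```

Only top-level posts are deduplicated by content on purpose: short replies like “lol” legitimately repeat across threads, so comments are deduplicated by `id` alone.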

Once the data lands, it plugs neatly into whatever you’re using: Tableau, Power BI, Python notebooks, or your ML pipelines. Your dashboards suddenly get a sharper pair of glasses.

If this sounds like the kind of order you want in your data life, drop us a message to test our Social Media API.

Advantages and Risks of AI-Driven Data Collection

Ethical Data Collection in the AI Era

As data collection in the age of AI gets faster and smarter, one question becomes impossible to ignore: should everything that can be collected actually be collected? That’s why ethical AI data collection deserves more attention.


Collecting data ethically is the central dilemma. In a perfect world, it would mean treating information like something alive: respecting it, understanding it, and not letting it run wild. But since AI data collection is still new to all of us on the Internet, there’s a lot to consider before you start.

1. Transparency and explainability

People who use the service should know what information is being collected, why, and who can see it. Your AI feels less like “magic” and more like a “trustworthy sidekick” if it can explain what it's doing in plain English instead of gibberish. It's like giving people subtitles for the way your system thinks.

2. User consent and fair usage

It's not enough for users to click “I agree” and keep scrolling like they're watching a TikTok video. They should genuinely understand what they're signing up for when they give consent, and be able to back out if they change their mind. Fair use means the data isn't used for anything the user didn't agree to.
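One way to make fair use enforceable rather than aspirational is to store which purposes each user agreed to and filter by purpose before any data is used. A minimal sketch, assuming a hypothetical `ConsentRecord` structure and made-up purpose names such as `"analytics"` and `"model_training"`:

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """What one user has agreed to (hypothetical structure)."""
    user_id: str
    purposes: set[str] = field(default_factory=set)  # e.g. {"analytics"}
    withdrawn: bool = False  # flipped to True when the user backs out


def usable_for(records: list[dict], consents: dict[str, ConsentRecord], purpose: str) -> list[dict]:
    """Keep only records whose owner currently consents to this specific purpose."""
    allowed = []
    for rec in records:
        consent = consents.get(rec["user_id"])
        # No record, withdrawn consent, or a different purpose all mean "do not use".
        if consent and not consent.withdrawn and purpose in consent.purposes:
            allowed.append(rec)
    return allowed


if __name__ == "__main__":
    consents = {
        "u1": ConsentRecord("u1", {"analytics"}),
        "u2": ConsentRecord("u2", {"analytics", "model_training"}),
        "u3": ConsentRecord("u3", {"model_training"}, withdrawn=True),
    }
    records = [{"user_id": u} for u in ("u1", "u2", "u3", "u4")]
    print(usable_for(records, consents, "model_training"))
```

Defaulting to “do not use” when no consent record exists is the safer choice, and a withdrawal takes effect the next time the filter runs.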

3. Building responsible data pipelines

A good data pipeline is defined as much by what it prevents as by what it does. Collect, clean, store, and process data, but don't treat the pipeline as a mystery box. Keep an eye on sensitive information, check for mistakes, and keep records so nothing goes unnoticed.
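The “keep records” advice can start as simply as logging what each pipeline step did. Here’s a minimal sketch with hypothetical step names that wraps each step in an audit entry and includes a crude sensitive-data check; the email regex is a rough heuristic, not a complete PII detector.

```python
import re
import time

# Rough heuristic for email-like strings; real PII detection needs far more than this.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def drop_empty(records: list[dict]) -> list[dict]:
    """Cleaning step: discard records with no text."""
    return [r for r in records if r.get("text")]


def flag_sensitive(records: list[dict]) -> list[dict]:
    """Check step: mark records whose text appears to contain an email address."""
    return [{**r, "has_email": bool(EMAIL_PATTERN.search(r["text"]))} for r in records]


def run_step(name, step, records, audit_log):
    """Run one pipeline step and append an audit entry describing what it did."""
    result = step(records)
    audit_log.append({
        "step": name,
        "records_in": len(records),
        "records_out": len(result),
        "timestamp": time.time(),
    })
    return result


if __name__ == "__main__":
    log = []
    data = [{"text": "great product"}, {"text": ""}, {"text": "email me at jo@example.com"}]
    data = run_step("drop_empty", drop_empty, data, log)
    data = run_step("flag_sensitive", flag_sensitive, data, log)
    for entry in log:
        print(entry["step"], entry["records_in"], "->", entry["records_out"])
```

Recording counts in and out per step makes silent data loss visible: if a cleaning step suddenly drops half the records, the audit log shows exactly where.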

4. Data minimization and anonymization

Take only what’s necessary and remove personal details whenever you can. Gathering too much data is like overpacking for a two-day trip – bulky, pointless, and irritating. Anonymization adds a safety layer: the data keeps its story, just without exposing names or sensitive bits.
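Both ideas fit in a few lines. Here’s a minimal sketch that keeps only an allowlist of fields (minimization) and replaces the user ID with a keyed HMAC-SHA256 hash. The field names and key are hypothetical, and strictly speaking this is pseudonymization rather than full anonymization: anyone holding the key can re-link records, and free text can still contain personal details.

```python
import hashlib
import hmac

# Only the fields the analysis actually needs (hypothetical allowlist).
ALLOWED_FIELDS = {"user_id", "country", "post_text", "likes"}


def minimize_and_pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Drop non-allowlisted fields and swap user_id for a stable keyed hash."""
    slim = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "user_id" in slim:
        digest = hmac.new(secret_key, str(slim["user_id"]).encode("utf-8"), hashlib.sha256)
        # Same input + same key -> same pseudonym, so records can still be joined.
        slim["user_id"] = digest.hexdigest()[:16]
    return slim


if __name__ == "__main__":
    key = b"replace-with-a-secret-from-your-vault"  # placeholder; never hard-code real keys
    raw = {"user_id": "alice", "email": "alice@example.com", "country": "DE",
           "post_text": "Loving my new air fryer", "likes": 3}
    print(minimize_and_pseudonymize(raw, key))
```

A keyed hash is used instead of a plain SHA-256 of the ID because unkeyed hashes of usernames are easy to reverse by hashing a list of guesses.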

Conclusion

We’ve reached a point where data collection isn’t just a backstage task anymore – it’s the fuel, the engine, and sometimes even the spark behind modern AI. What used to take teams, tools, and too many spreadsheets now happens faster, cleaner, and far more intelligently.

But none of it works without reliable foundations. That’s where APIs, structured datasets, and clean pipelines come in. They give AI the stability it depends on, while AI brings the flexibility they never had. Together, they reshape what “data collection” means today.

If there’s one takeaway from the AI era, it’s this: the future belongs to teams who mix precision with adaptability, structure with interpretation, rules with learning. And if you plan to build anything meaningful – a model, a dashboard, a product, or a business – you’ll need both.

So as the landscape gets richer (and louder), the smart move is to work with tools that can handle the noise without losing the signal. That’s where a unified, reliable API like Data365 steps in: it gives you the order AI needs to grow, and the clarity you need to build with confidence. Contact us today and get your dashboards thinking smarter, not harder.

Extract data from five social media networks with Data365 API

Request a free 14-day trial and get 20+ data types
